Questions RecOps Professionals Need to Ask When Assessing AI Ethics by Jeffrey Pole of Warden AI

This article is a guest piece by Jeffrey Pole of Warden AI.

The rapid adoption of AI in RecOps has transformed the way talent acquisition (TA) teams operate.

With 3 in 4 companies now incorporating AI into their hiring processes, its impact on recruitment is undeniable. AI tools can improve hiring efficiency, boost job post effectiveness, and expand the talent pool, but they also introduce significant ethical and compliance challenges.

Here are six questions RecOps professionals should ask when assessing an AI system they want to use in recruitment.

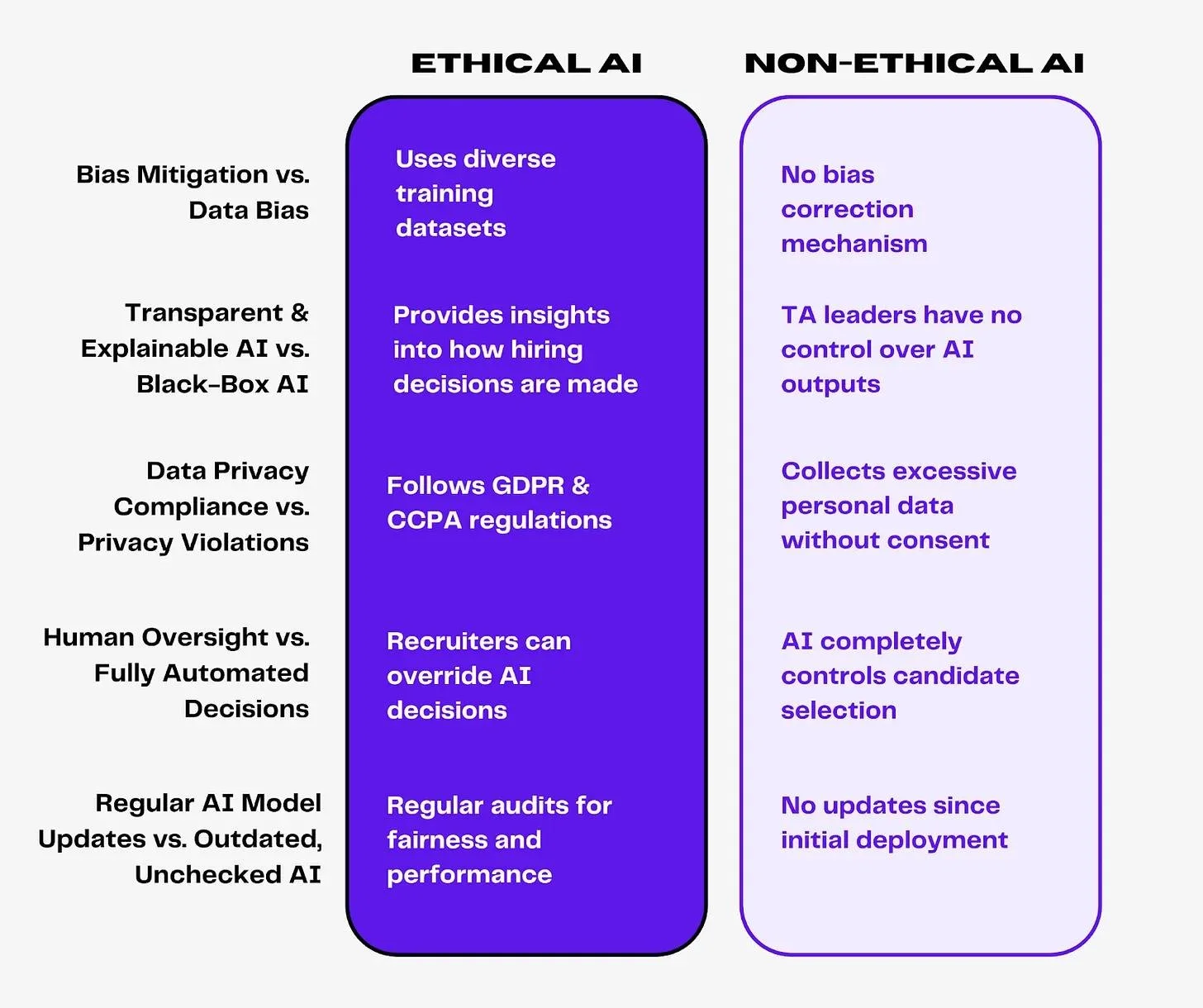

1. How does the AI model mitigate bias?

One of the biggest concerns when assessing the effectiveness and ethics of an AI tool is bias. AI models are trained on historical data, which may contain systemic biases that the model then learns and reproduces in its recommendations.

RecOps teams should remain vigilant about the potential for bias and be sure to ask vendors these three questions:

What datasets were used to train the AI?

Do you conduct AI bias audits, and if so, how often?

Have you analyzed the training dataset for representation across different demographic groups?
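For teams that want to sanity-check a vendor's answer to that last question, a basic representation check compares each group's share of the training data with a reference share, such as the make-up of the applicant pool. The sketch below is a minimal illustration under stated assumptions: the record format, the `gender` field, the reference shares, and the 0.8 tolerance are all hypothetical choices, not any vendor's or regulator's method.

```python
from collections import Counter


def representation_report(records, attribute, reference_shares, tolerance=0.8):
    """Compare each group's share of a training dataset with a reference share.

    records: list of dicts, one per training example (hypothetical schema).
    attribute: key holding the demographic label (hypothetical, e.g. "gender").
    reference_shares: group -> expected share, e.g. taken from applicant-pool data.
    tolerance: flag a group whose observed/expected ratio is below this value.
        0.8 is an illustrative default, not a regulatory threshold.
    """
    # Count only records that actually carry the attribute.
    counts = Counter(r[attribute] for r in records if attribute in r)
    total = sum(counts.values())
    report = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total if total else 0.0
        # A zero reference share cannot be under-represented; avoid dividing by zero.
        ratio = observed / expected if expected else float("inf")
        report[group] = {
            "observed_share": round(observed, 3),
            "expected_share": expected,
            "under_represented": ratio < tolerance,
        }
    return report


# Illustrative data only: 30 female and 70 male training examples.
training = [{"gender": "female"}] * 30 + [{"gender": "male"}] * 70
report = representation_report(training, "gender", {"female": 0.5, "male": 0.5})
print(report)
```

A flag like this is a prompt for follow-up questions to the vendor, not proof of bias on its own.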

2. Is the AI model explainable and transparent?

Black-box AI models can make it difficult to understand why a particular candidate was recommended or rejected.

Transparency is critical for compliance and trust. Consider these questions:

Can the vendor provide insights into how the AI reaches its conclusions?

How does the vendor evaluate the truthfulness of statements made by the AI?

Are candidates informed when AI is used in the hiring process?

Pro tip: AI vendors usually have what is called an “AI Explainability” document, a report or statement that offers a “peek under the hood” at how their AI operates.

3. Does the AI tool comply with data privacy regulations?

Data privacy laws like GDPR and CCPA impose strict requirements on how candidate data is collected and processed. When evaluating AI vendors, ask:

What data is collected, and how is it stored?

Does the system anonymize or encrypt sensitive candidate information?
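When a vendor says it anonymizes data, it is worth asking exactly what that means. One common technique is pseudonymization: removing direct identifiers and replacing them with a keyed hash so records can still be linked without exposing who the candidate is. Under GDPR, pseudonymized data still counts as personal data, so this reduces risk rather than removing obligations. The sketch below assumes hypothetical field names (`name`, `email`, `phone`) and uses Python's standard `hmac` module with SHA-256; it illustrates the idea and does not describe any particular vendor's system.

```python
import hashlib
import hmac

# Hypothetical field names for direct identifiers in a candidate record.
DIRECT_IDENTIFIERS = {"name", "email", "phone"}


def pseudonymize(candidate, secret_key):
    """Return a copy of a candidate record with direct identifiers removed.

    A keyed hash (HMAC-SHA256) of the normalized email becomes a stable token,
    so the same candidate maps to the same token across records. Anyone holding
    secret_key and a candidate's email can recompute the token, so the key must
    be stored separately from the pseudonymized data.
    """
    normalized_email = candidate["email"].strip().lower().encode("utf-8")
    token = hmac.new(secret_key, normalized_email, hashlib.sha256).hexdigest()
    # Build a new dict so the original record is left untouched.
    cleaned = {k: v for k, v in candidate.items() if k not in DIRECT_IDENTIFIERS}
    cleaned["candidate_token"] = token
    return cleaned


key = b"replace-with-a-securely-stored-secret"  # illustrative only
record = {"name": "Ada", "email": "Ada@Example.com", "phone": "555-0100", "skills": ["SQL"]}
print(pseudonymize(record, key))
```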

4. What are the legal risks associated with the AI tool?

AI-driven hiring tools must comply with anti-discrimination laws and employment regulations, such as the Civil Rights Act (US), the Equality Act (UK), the EU AI Act, Colorado SB 205, and NYC Local Law 144. To mitigate legal risks, ask the following:

Has the tool undergone third-party compliance assessments?

How does the system handle adverse impact analysis?
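A common starting point for adverse impact analysis in the US is the “four-fifths rule” from the Uniform Guidelines on Employee Selection Procedures: a group whose selection rate is less than 80% of the rate for the most-selected group is generally regarded as showing evidence of adverse impact. NYC Local Law 144 bias audits report a comparable “impact ratio”. The sketch below computes both; the group labels and counts are made up for illustration, and the result is a screening signal, not a legal determination.

```python
def impact_ratios(outcomes):
    """Impact ratio per group: its selection rate divided by the highest group's rate.

    outcomes: dict mapping group -> (number_selected, number_of_applicants).
    Groups with zero applicants are skipped.
    """
    rates = {g: sel / total for g, (sel, total) in outcomes.items() if total > 0}
    if not rates or max(rates.values()) == 0:
        raise ValueError("need at least one group with a non-zero selection rate")
    highest = max(rates.values())
    return {g: rate / highest for g, rate in rates.items()}


def four_fifths_flags(outcomes, threshold=0.8):
    """True for groups whose impact ratio falls below the four-fifths threshold."""
    return {g: ratio < threshold for g, ratio in impact_ratios(outcomes).items()}


# Hypothetical screening outcomes: (advanced to interview, applicants).
outcomes = {"group_a": (48, 100), "group_b": (24, 80)}
print(impact_ratios(outcomes))      # group_b's ratio is roughly 0.625
print(four_fifths_flags(outcomes))  # group_b is flagged, group_a is not
```

Small groups can make these ratios unstable, so it is also worth asking a vendor how its analysis handles categories with few applicants.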

5. How adaptable is the AI tool to your specific needs?

No two organizations have the same hiring needs. Before implementation, verify the flexibility of the AI solution:

Can the tool be customized to align with your company's hiring criteria?

Does it integrate seamlessly with existing HR tech stacks (ATS, HRIS, CRM)?

6. How frequently is the AI model updated?

AI models require ongoing updates to remain effective and compliant. Ensure the vendor provides:

Regular model updates to align with changing hiring laws and market conditions.

Continuous monitoring to address bias, errors, and performance issues.

Final Thoughts

74% of TA professionals agree that AI will transform the way organizations hire. If a team is building models in-house, the same best practices still apply. By asking the right questions, teams can make informed decisions that protect both candidates and their organizations.